How to Use Papagayo-NG in Synfig Studio for Lip-Sync Animation

Lip synchronization (lip sync) in animation is the art of making an animated character appear to speak pre-recorded dialogue. It involves animating the character's mouth so that its shapes match the dialogue and its timing. Good lip sync makes a character come alive.

In the early days, lip syncing was done frame by frame. This method is time-consuming and requires a skilled animator.

Thanks to modern computer software, lip synchronization is now quicker and easier. Synfig Studio has an automatic lip-sync feature that lets the animator complete the process with less effort.

Synfig Studio works with Papagayo-NG, which is used to control speech timing. Papagayo-NG produces a lip-sync file that, when imported into Synfig Studio, provides a sound layer and a set of phoneme folders matching the dialogue. Papagayo-NG is an open-source continuation of Papagayo, which was originally developed by Lost Marble.

Below are the three key steps for creating lip-sync animation with Synfig Studio.

1. Creating Mouth Shapes in Synfig Studio

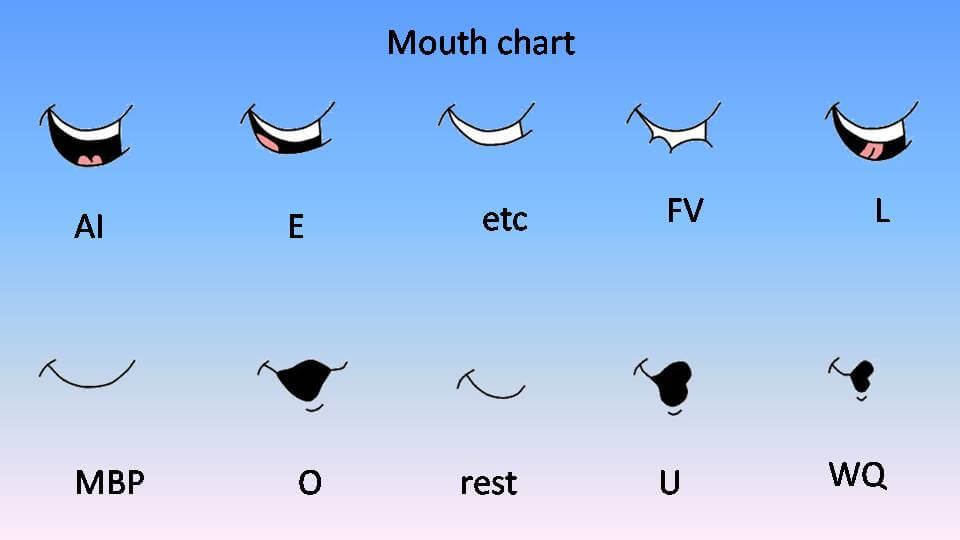

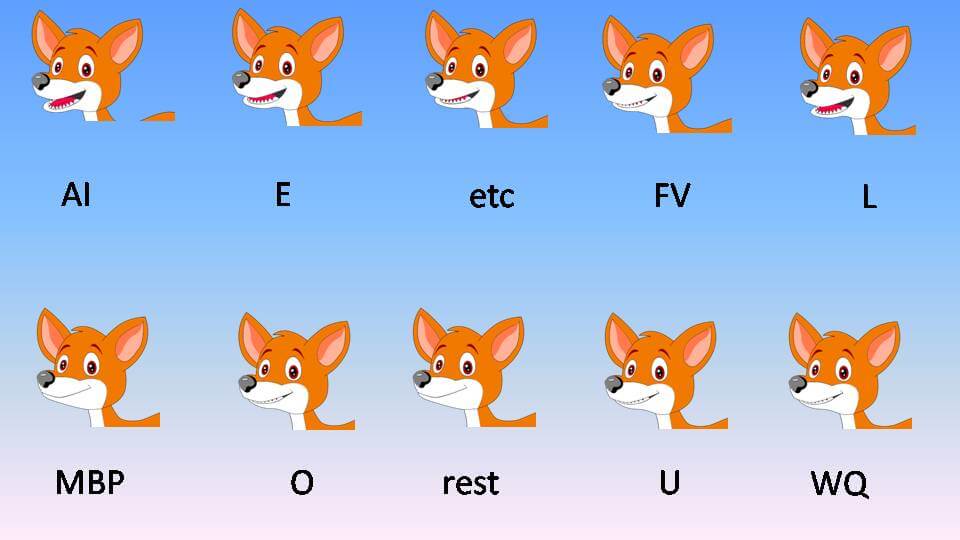

Lip sync requires a different mouth shape for each phonetic sound, so the animator needs to draw one mouth shape for each entry in the mouth chart shown below.

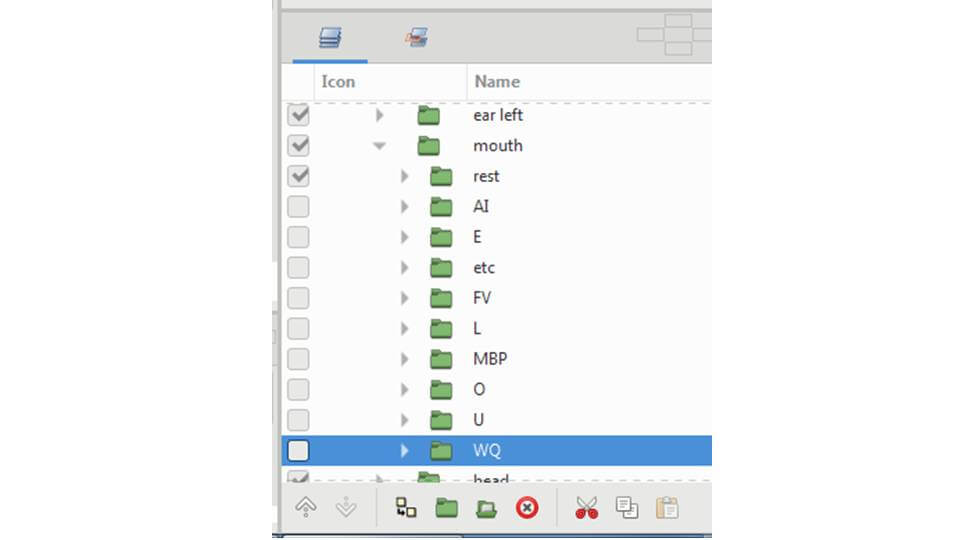

Name the drawn mouth shapes “AI”, “E”, “rest”, “etc”, “FV”, “L”, “BP”, “O”, “Q” and “WQ”. These names matter later, because the phonetic folders that Synfig Studio creates when you import the lip-sync file use the same names. Use the character with this set of mouth shapes when animating dialogue.
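Because these names are matched later, it can help to check a character's mouth set before moving on. The Python sketch below is a hypothetical helper, not part of Synfig Studio: it assumes you have listed the names of your drawn mouth layers yourself, and it reports which of the ten names above are still missing.

```python
# Hypothetical helper, not part of Synfig Studio or Papagayo-NG.
# The ten mouth-shape names used in this article.
REQUIRED_MOUTHS = {"AI", "E", "rest", "etc", "FV", "L", "BP", "O", "Q", "WQ"}


def missing_mouth_shapes(drawn_names):
    """Return the required mouth names that have no drawing, sorted.

    Names are compared exactly (case-sensitive), on the assumption that
    the folders are later matched by their exact names.
    """
    return sorted(REQUIRED_MOUTHS - set(drawn_names))


if __name__ == "__main__":
    drawn = ["AI", "E", "rest", "etc", "FV", "L", "O", "WQ"]
    print(missing_mouth_shapes(drawn))  # ['BP', 'Q']
```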

2. Controlling Speech Timing and Creating the Sound Layer

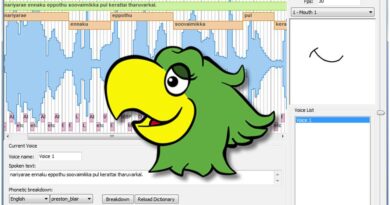

Papagayo-NG is used to control speech timing and create the sound layer. Its user interface is simple and easy to understand. The procedure below creates the sound layer.

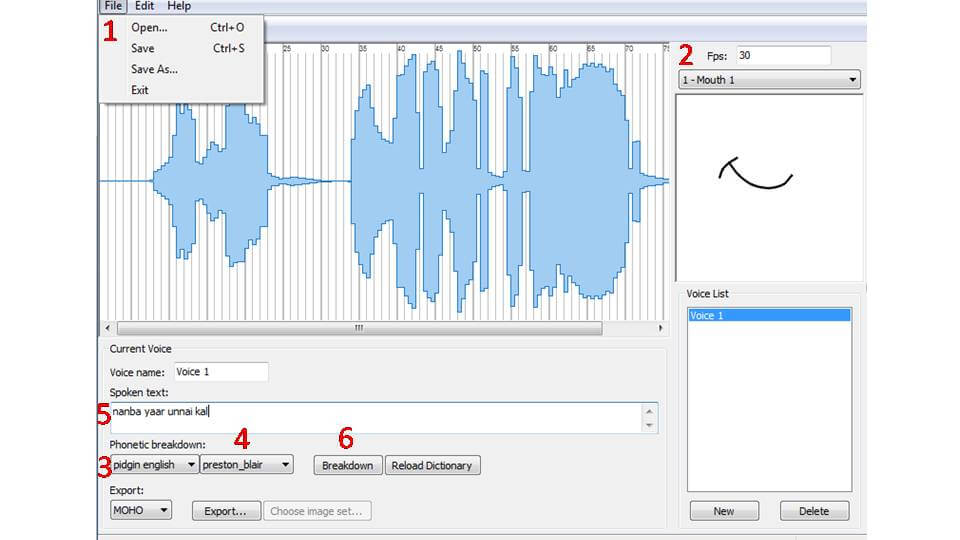

1. Import the pre-recorded dialogue file using File > Open. Papagayo-NG supports MP3 and WAV audio files.

2. Select the type of mouth chart as shown in the image.

3. Choose your language under Phonetic breakdown. Papagayo-NG supports languages such as English, German, French, Finnish, Dutch, Italian, Russian, Spanish and Swedish. If your language is not supported, you can choose Pidgin English.

4. Choose the Preston Blair or Fleming-Dobbs phoneme set, as needed.

5. Type the dialogue, in the selected language, into the Spoken Text box as shown in the image. If you chose Pidgin English, type the dialogue using English phonetic spellings.

6. Select the Breakdown option. This creates a sentence track and a word track in the audio working area.

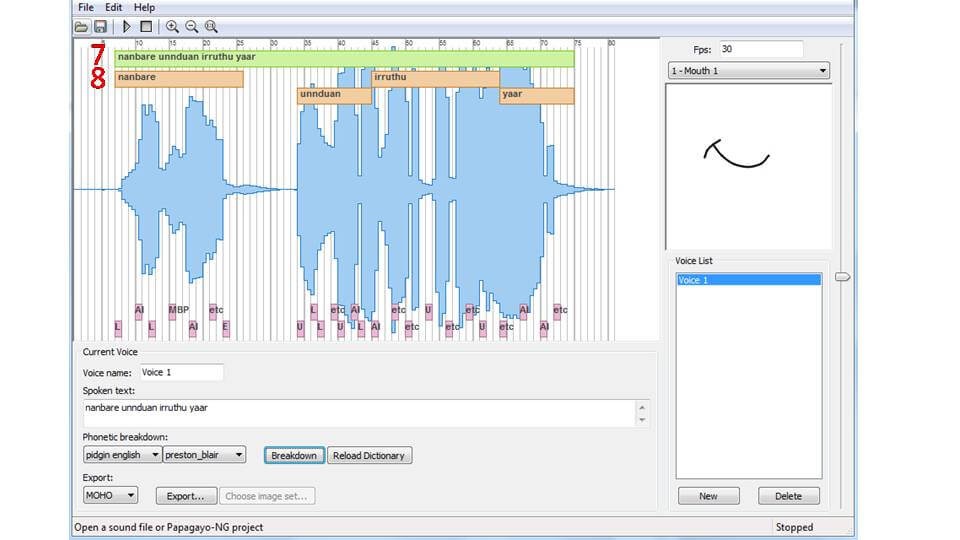

7. Adjust the speech timing by dragging the sentence and word tracks in the audio working area.

8. When the timing is finished, save the file in .pgo format using the Save option in the File menu. This .pgo file contains the sound layer data for lip synchronization.
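To see what the saved file holds, the sketch below reads the text of a .pgo file and extracts the sound path, the frame rate and the phoneme keys (the frame at which each mouth shape starts). It assumes the classic Papagayo "lipsync version 1" text layout, in which phoneme keys are written as `<frame> <phoneme>` on lines indented by four tabs; other Papagayo-NG versions or formats may differ, and the names `parse_pgo` and `PgoData` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PgoData:
    sound_path: str
    fps: int
    keys: list  # (frame, phoneme) tuples in file order


def parse_pgo(text):
    """Extract the sound path, fps and phoneme keys from .pgo text.

    Assumes the "lipsync version 1" layout: five header lines (version,
    sound path, fps, length, voice count), then nested voice, phrase and
    word blocks, with phoneme keys on lines indented by exactly four tabs.
    """
    lines = text.splitlines()
    if not lines or not lines[0].startswith("lipsync version"):
        raise ValueError("not a lipsync .pgo file")
    sound_path = lines[1].strip()
    fps = int(lines[2].strip())
    keys = []
    for line in lines[5:]:
        # Phoneme keys are the only lines nested exactly four tabs deep.
        if line.startswith("\t" * 4) and not line.startswith("\t" * 5):
            frame, phoneme = line.strip().split(" ", 1)
            keys.append((int(frame), phoneme))
    return PgoData(sound_path, fps, keys)


if __name__ == "__main__":
    sample = (
        "lipsync version 1\ndialogue.wav\n24\n48\n1\n"
        "\tVoice 1\n\thello\n\t1\n"
        "\t\thello\n\t\t1\n\t\t20\n\t\t1\n"
        "\t\t\thello 1 20 4\n"
        "\t\t\t\t1 etc\n\t\t\t\t5 E\n\t\t\t\t9 L\n\t\t\t\t14 O\n"
    )
    print(parse_pgo(sample).keys)  # [(1, 'etc'), (5, 'E'), (9, 'L'), (14, 'O')]
```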

Note that the .pgo file and the pre-recorded audio file must be in the same folder.
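The same-folder rule can be checked before importing. This hypothetical helper reads the sound path from the second line of the .pgo file (assuming the classic "lipsync version 1" layout, where the sound path follows the version line) and tests whether a file with that name sits next to the .pgo file.

```python
import os


def audio_beside_pgo(pgo_path):
    """Return True if the audio file named in a .pgo header is in the same
    folder as the .pgo file itself.

    Assumes the sound path is stored on the second line of the file.
    """
    with open(pgo_path, encoding="utf-8") as f:
        f.readline()  # skip the "lipsync version 1" line
        sound_path = f.readline().strip()
    pgo_dir = os.path.dirname(os.path.abspath(pgo_path))
    # Keep only the file name (normalising Windows separators first), so a
    # path recorded on another machine still passes if the audio file was
    # copied next to the .pgo file.
    name = os.path.basename(sound_path.replace("\\", "/"))
    return os.path.isfile(os.path.join(pgo_dir, name))
```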

3. Implementing the Sound Layer in the Scene

Set up the background and characters in Synfig Studio before adding the sound layer. Note that the animation must be longer, in frames, than the pre-recorded audio. You can set the animation length with the Time option in the canvas properties.
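To choose a long enough end time, convert the audio's duration into frames. This sketch uses Python's standard `wave` module, so it only handles WAV files (not MP3); `fps=24` is an assumed default and should be replaced with your canvas's frame rate. Rounding up ensures the last fraction of a second of dialogue is still covered.

```python
import math
import wave


def frames_needed(wav_path, fps=24):
    """Return how many animation frames a WAV dialogue file spans at `fps`.

    The duration is the number of audio samples divided by the sample rate;
    the frame count is rounded up so a partial final frame is included.
    """
    with wave.open(wav_path, "rb") as w:
        seconds = w.getnframes() / w.getframerate()
    return math.ceil(seconds * fps)
```

For example, 3.5 seconds of dialogue at 24 fps spans 84 frames, so the animation should run longer than 84 frames.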

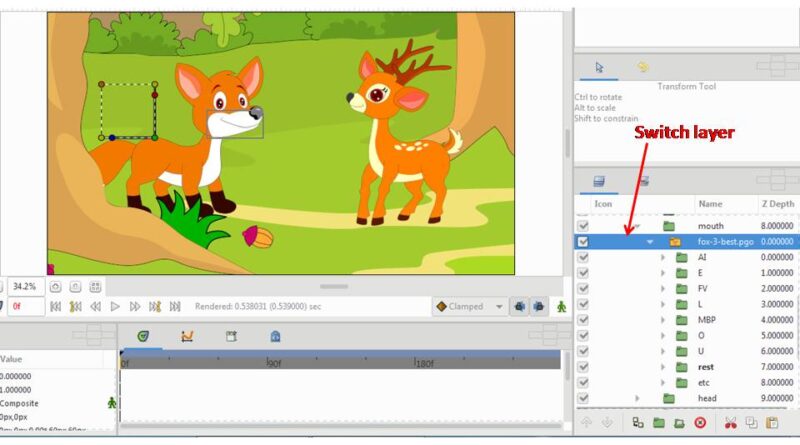

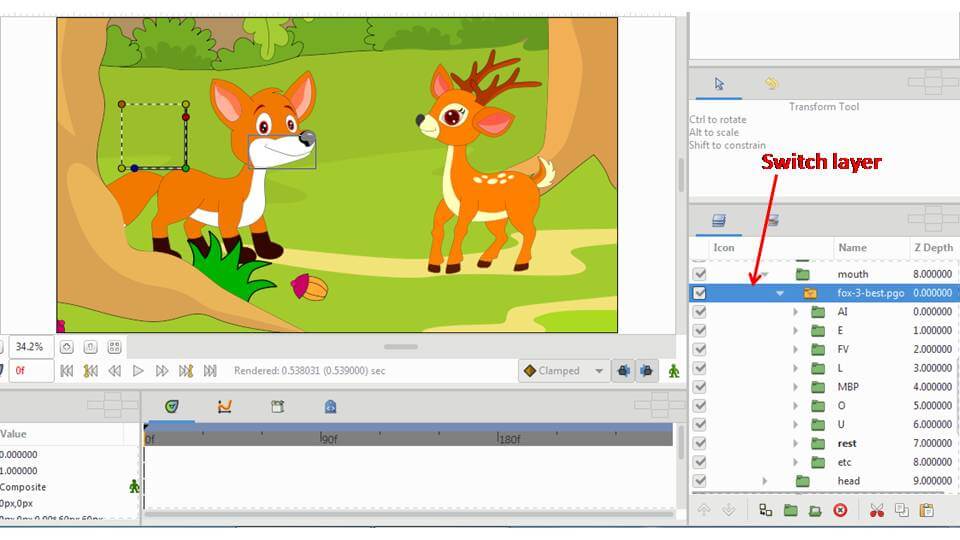

1. Import the .pgo file using File > Import. This creates a sound layer and a switch layer in the layer tree. The switch layer contains empty phonetic folders named AI, E, rest, etc, FV, L, BP, O, Q and WQ.

2. Drag and drop this switch layer inside the character's mouth layer, where the mouth shapes you drew earlier are located.

3. Drag your drawn AI folder (which contains the AI mouth-shape drawings) into the switch layer's AI folder. Repeat this for the remaining E, rest, etc, FV, L, BP, O, Q and WQ folders.

4. Preview the lip-sync animation using the Preview button. If the mouth moves too fast, add a Stroboscope layer above the switch layer. This layer controls the speed of the lip sync through its Frequency parameter; a value between 10 and 15 usually gives good results.
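The effect of the Stroboscope layer can be modelled roughly as follows. This is a simplified sketch, assuming the layer lets time advance only `frequency` times per second and that each phoneme key holds until the next one; the function names and the "rest" fallback before the first key are hypothetical. With 24 fps and a frequency of 12, the mouth can change at most every second frame, which is why a lower frequency calms a mouth that changes too quickly.

```python
import bisect


def active_phoneme(keys, frame):
    """Return the phoneme held at `frame`: the latest key at or before it.

    `keys` is a list of (frame, phoneme) pairs sorted by frame.
    Before the first key, the mouth is assumed to be at "rest".
    """
    frames = [f for f, _ in keys]
    i = bisect.bisect_right(frames, frame) - 1
    return keys[i][1] if i >= 0 else "rest"


def strobed_phonemes(keys, total_frames, fps=24, frequency=12):
    """Return the phoneme shown on each frame when time only advances
    `frequency` times per second (integer fps and frequency)."""
    shown = []
    for n in range(total_frames):
        # Index of the last strobe step at or before frame n (time n / fps).
        step = (n * frequency) // fps
        # Last whole frame at or before that step's time (step / frequency).
        held_frame = (step * fps) // frequency
        shown.append(active_phoneme(keys, held_frame))
    return shown
```

For example, with keys `[(0, "rest"), (1, "E"), (3, "O"), (5, "rest")]`, `strobed_phonemes(keys, 7)` returns `['rest', 'rest', 'E', 'E', 'O', 'O', 'rest']`, whereas without the stroboscope the mouth would already switch to E on frame 1.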

This procedure lets you animate lip sync that follows the pre-recorded audio. The automatic lip-sync process keeps the speech timing accurate, and together Papagayo-NG and Synfig Studio make lip-sync animation quick and easy.

You can also read our review of Synfig Studio to learn more about the software.